Building an MCP Hub That Doesn't Drown Your Agent

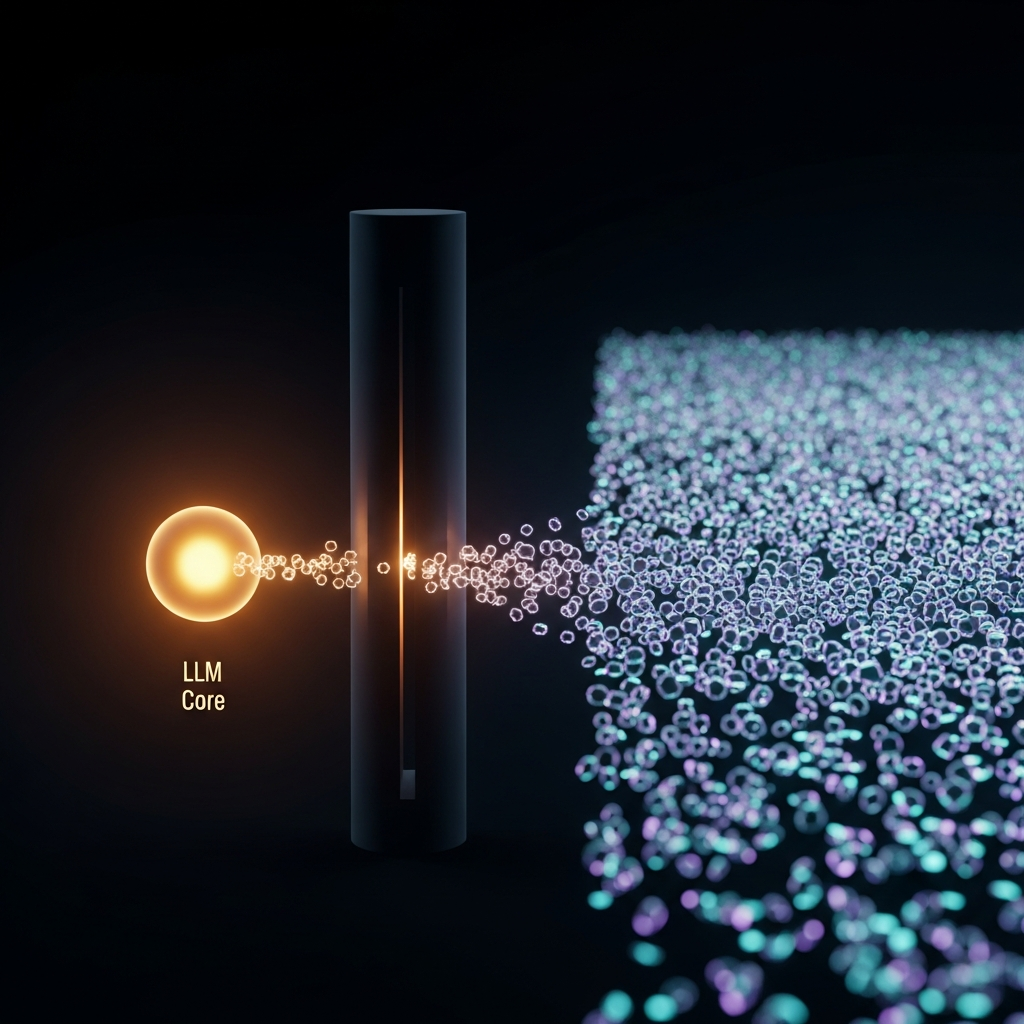

When the Model Context Protocol started landing inside every shiny chat client, I loved the idea of plugging my ragtag local tools into Claude Desktop or Codex without a bespoke integration. What I didn’t love was watching each agent burn a third of its context window just loading tool manifests it might never call. The more plugins I bolted on, the worse the latency, and the harder it became to experiment with smaller local models that already run tight on tokens. The MCP hub is my fix: a containerized FastAPI service that exposes a microscopic MCP surface (search_tools, describe_tool, invoke_tool) and loads the rest on demand.

The context tax problem

Every agent session felt like bringing the entire toolbox to the bench just in case. Most runs touch three or four tools, yet we hand the model dozens of JSON schemas before it writes its first plan. On small local models the manifests alone can fill the context window, and even on Claude 3.5 they slow reasoning because the planner has to weigh every option. I wanted a way to keep discovery available without preloading everything.
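To put rough numbers on the tax, here is a back-of-envelope sketch. It assumes about four characters per token (a common rule of thumb, not a real tokenizer) and compares fifty hypothetical, modestly sized tool manifests against three gateway tools. The schemas and argument names are illustrative, not the hub's actual definitions.

```python
import json

# Rough heuristic (an assumption, not a real tokenizer): ~4 characters per
# token for English-heavy JSON. Swap in your model's tokenizer for real numbers.
CHARS_PER_TOKEN = 4


def estimate_tokens(manifests: list[dict]) -> int:
    """Estimate the prompt cost of serializing tool manifests into context."""
    return len(json.dumps(manifests)) // CHARS_PER_TOKEN


def fake_manifest(i: int) -> dict:
    """Build a hypothetical, modestly sized tool manifest for illustration."""
    return {
        "name": f"tool_{i}",
        "description": f"Hypothetical tool number {i} that does one narrow job.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Free-text input."},
                "limit": {"type": "integer", "description": "Max results."},
                "verbose": {"type": "boolean", "description": "Extra detail."},
            },
            "required": ["query"],
        },
    }


def gateway_manifests() -> list[dict]:
    """Three gateway tools; descriptions and argument names are assumptions."""

    def tool(name: str, description: str, props: dict, required: list[str]) -> dict:
        return {
            "name": name,
            "description": description,
            "inputSchema": {"type": "object", "properties": props, "required": required},
        }

    return [
        tool("search_tools", "Fuzzy-search the hub's tool catalog.",
             {"query": {"type": "string"}}, ["query"]),
        tool("describe_tool", "Return the JSON schema for one tool.",
             {"name": {"type": "string"}}, ["name"]),
        tool("invoke_tool", "Call a tool by name with arguments.",
             {"name": {"type": "string"}, "arguments": {"type": "object"}}, ["name"]),
    ]


if __name__ == "__main__":
    full = estimate_tokens([fake_manifest(i) for i in range(50)])
    thin = estimate_tokens(gateway_manifests())
    print(f"50 manifests: ~{full} tokens; 3 gateway tools: ~{thin} tokens")
```

Real manifests with long descriptions and nested schemas are usually larger than these toy ones, so the gap only widens.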

Three gateway tools instead of fifty manifests

The hub ships only three MCP tools. search_tools uses fuzzy matching so the agent can find candidates by keyword, describe_tool returns the JSON schema for the exact tool it wants, and invoke_tool proxies the call. The actual tool definitions live in Python provider modules inside the hub, so agents see a single short list of gateway tools and fetch specifics only when they need them. The pattern mirrors lazy module imports: the interface stays thin, yet full capability is there when the moment arrives.
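A minimal sketch of the three gateway operations over an in-memory registry, assuming Python's standard-library difflib as the fuzzy matcher (the hub's actual matching library and scoring are not described here). The Tool and Registry classes, the demo tools get_weather and add, the 0.6 cutoff, and the argument names are all hypothetical.

```python
import difflib
import re
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]
    func: Callable[..., Any]


def _words(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit (incl. "_").
    return re.findall(r"[a-z0-9]+", text.lower())


@dataclass
class Registry:
    tools: dict[str, Tool] = field(default_factory=dict)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def _score(self, query: str, tool: Tool) -> float:
        # For each query term, take its best fuzzy match against the tool's
        # name and description words, then average across terms.
        terms = _words(query)
        words = _words(f"{tool.name} {tool.description}")
        if not terms or not words:
            return 0.0
        best = [max(difflib.SequenceMatcher(None, t, w).ratio() for w in words) for t in terms]
        return sum(best) / len(best)

    def search_tools(self, query: str, limit: int = 5, cutoff: float = 0.6) -> list[dict[str, str]]:
        # Gateway tool 1: return only names and descriptions, never full schemas.
        scored = [(self._score(query, t), t) for t in self.tools.values()]
        hits = sorted((p for p in scored if p[0] >= cutoff), key=lambda p: p[0], reverse=True)
        return [{"name": t.name, "description": t.description} for _, t in hits[:limit]]

    def describe_tool(self, name: str) -> dict[str, Any]:
        # Gateway tool 2: the full schema, fetched only on demand.
        tool = self.tools[name]  # raises KeyError for unknown tools
        return {"name": tool.name, "description": tool.description, "inputSchema": tool.input_schema}

    def invoke_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        # Gateway tool 3: proxy the call to the provider function.
        return self.tools[name].func(**arguments)


def build_demo_registry() -> Registry:
    reg = Registry()
    reg.register(Tool(
        name="get_weather",
        description="Return a canned forecast for a city.",
        input_schema={"type": "object", "properties": {"city": {"type": "string"}}, "required": ["city"]},
        func=lambda city: f"Sunny in {city}",
    ))
    reg.register(Tool(
        name="add",
        description="Add two integers.",
        input_schema={
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        func=lambda a, b: a + b,
    ))
    return reg
```

Only these three methods would be advertised to the MCP client. Scoring each query term separately lets a misspelled keyword such as "wether" still surface get_weather, while unrelated tools fall below the cutoff.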

Containerized, debuggable, and boring on purpose

The stack stays intentionally simple: Python 3.11, FastAPI, and an in-memory registry of demo tools that can be swapped out for real APIs. In development you can run it with poetry run uvicorn ..., hit REST mirrors such as POST /search_tools for quick smoke tests, or attach MCP clients through the Streamable HTTP endpoint at /stream. The service also runs as a container, and pointing Claude Desktop at mcp-proxy --transport=streamablehttp http://127.0.0.1:7001/stream/ just works.
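For wiring things up, this sketch builds two artifacts. The first is a Claude Desktop mcpServers entry that launches mcp-proxy with the arguments quoted in this section; the server name mcp-hub is a placeholder. The second is a smoke-test request for the REST mirror, assuming POST /search_tools accepts a JSON body with a query field, which is a guess at the payload shape rather than the hub's documented contract.

```python
import json
import urllib.request

HUB_URL = "http://127.0.0.1:7001"  # host and port from the mcp-proxy example


def claude_desktop_config(server_name: str = "mcp-hub") -> dict:
    """Claude Desktop entry that bridges stdio to the hub's Streamable HTTP endpoint."""
    return {
        "mcpServers": {
            server_name: {
                "command": "mcp-proxy",
                "args": ["--transport=streamablehttp", f"{HUB_URL}/stream/"],
            }
        }
    }


def search_request(query: str) -> urllib.request.Request:
    """Build a POST /search_tools smoke-test request (body shape is assumed)."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        f"{HUB_URL}/search_tools",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def smoke_test(query: str = "weather") -> dict:
    """Send the request to a running hub and decode its JSON reply."""
    with urllib.request.urlopen(search_request(query), timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(json.dumps(claude_desktop_config(), indent=2))
    try:
        print(smoke_test())
    except OSError as exc:  # URLError and connection errors subclass OSError
        print(f"hub not reachable: {exc}")
```

Merge the printed JSON into claude_desktop_config.json; if mcp-proxy is not on Claude Desktop's PATH, use its absolute path as the command.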

Why it matters for agentic workflows

Local-first automations benefit the most. If you’re experimenting with Qwen, Phi, or any other sub-10B model, you need strict token discipline. Offloading discovery into the hub means the agent keeps its context for actual reasoning instead of bureaucracy. It also becomes easier to audit tool usage because every call flows through the same gateway, which is perfect for logging, metrics, or enforcing permissions when you hand the stack to teammates.
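Because every call passes through one gateway, auditing and permissions can live in a single wrapper. The sketch below assumes a per-agent allowlist keyed by a hypothetical agent_id and a dispatcher callable that takes (name, arguments); how the hub would actually identify the calling agent (an API key, a header, a session) is left open, and the AuditedGateway and PermissionDenied names are illustrative.

```python
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("mcp_hub.audit")


class PermissionDenied(Exception):
    """Raised when an agent calls a tool outside its allowlist."""


class AuditedGateway:
    """Wrap a tool dispatcher with per-agent allowlists and an audit trail."""

    def __init__(self, invoke: Callable[[str, dict], Any], allowlist: dict[str, set[str]]):
        self._invoke = invoke  # hypothetical dispatcher: (tool name, arguments) -> result
        self._allowlist = allowlist  # agent_id -> set of permitted tool names
        self.audit_log: list[dict[str, Any]] = []

    def invoke_tool(self, agent_id: str, name: str, arguments: dict) -> Any:
        record: dict[str, Any] = {"agent": agent_id, "tool": name, "ok": False}
        start = time.perf_counter()
        try:
            if name not in self._allowlist.get(agent_id, set()):
                raise PermissionDenied(f"{agent_id} may not call {name}")
            result = self._invoke(name, arguments)
            record["ok"] = True
            return result
        except Exception as exc:
            record["error"] = type(exc).__name__
            raise
        finally:
            # Every call, allowed or not, lands in the log with its latency.
            record["ms"] = round((time.perf_counter() - start) * 1000, 3)
            self.audit_log.append(record)
            logger.info("tool call %s", record)
```

In a real deployment the in-memory audit_log would give way to structured logs or metrics, but the choke point stays the same.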

Where the hub goes next

There’s plenty left to build: semantic search for better recall, persisted registries so tools can be hot-loaded, and per-agent permissions for sensitive operations. The big idea, though, is already live: decouple tool discovery from execution so we stop wasting context on manifests nobody reads. In a follow-up I’ll cover how Anthropic’s new “code execution with MCP” release pushes this concept even further.
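As one possible shape for a persisted, hot-loadable registry, this sketch stores tool entries in a JSON file and reloads them whenever the file's contents change. The entry format, with a "module:function" target string resolved through importlib, is an assumption made for the example, as is the FileRegistry class name.

```python
import hashlib
import importlib
import json
from pathlib import Path
from typing import Any, Callable


class FileRegistry:
    """Tool registry persisted as JSON; reloads when the file content changes.

    Each entry is {"name", "description", "target"}, where target is a
    "module:function" string (a hypothetical format chosen for this sketch).
    """

    def __init__(self, path: Path):
        self.path = Path(path)
        self._digest: str | None = None
        self._tools: dict[str, dict[str, Any]] = {}
        self._funcs: dict[str, Callable[..., Any]] = {}

    def _reload_if_changed(self) -> None:
        raw = self.path.read_bytes()
        digest = hashlib.sha256(raw).hexdigest()
        if digest == self._digest:
            return  # unchanged since the last load
        entries = json.loads(raw)
        funcs = {}
        for entry in entries:
            module_name, _, attr = entry["target"].partition(":")
            funcs[entry["name"]] = getattr(importlib.import_module(module_name), attr)
        # Swap in the new state only after every entry resolved successfully.
        self._tools = {e["name"]: e for e in entries}
        self._funcs = funcs
        self._digest = digest

    def names(self) -> list[str]:
        self._reload_if_changed()
        return sorted(self._tools)

    def invoke(self, name: str, arguments: dict[str, Any]) -> Any:
        self._reload_if_changed()
        return self._funcs[name](**arguments)
```

Hashing the file instead of checking its modification time avoids missing edits that land within the filesystem's timestamp resolution. Importing arbitrary targets named in a file is also a code-execution surface, which is one more reason per-agent permissions belong on the roadmap.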